Artificial intelligence (AI) has made incredible strides in recent years, revolutionizing fields from healthcare to transportation. We’ve seen AI defeat world champions in complex games like Go, generate realistic images, and even write compelling prose. But despite these impressive achievements, AI is far from perfect. In fact, sometimes it can be hilariously, bafflingly wrong.

This post will dive into some of the most amusing and insightful AI failures, highlighting the limitations of current AI systems and reminding us that, for all their power, they can still make mistakes that even a child wouldn’t. We’ll explore misinterpretations of images, nonsensical text generation, and other instances where AI’s “intelligence” leaves much to be desired. So, buckle up and prepare for a chuckle as we explore the lighter side of AI’s shortcomings.

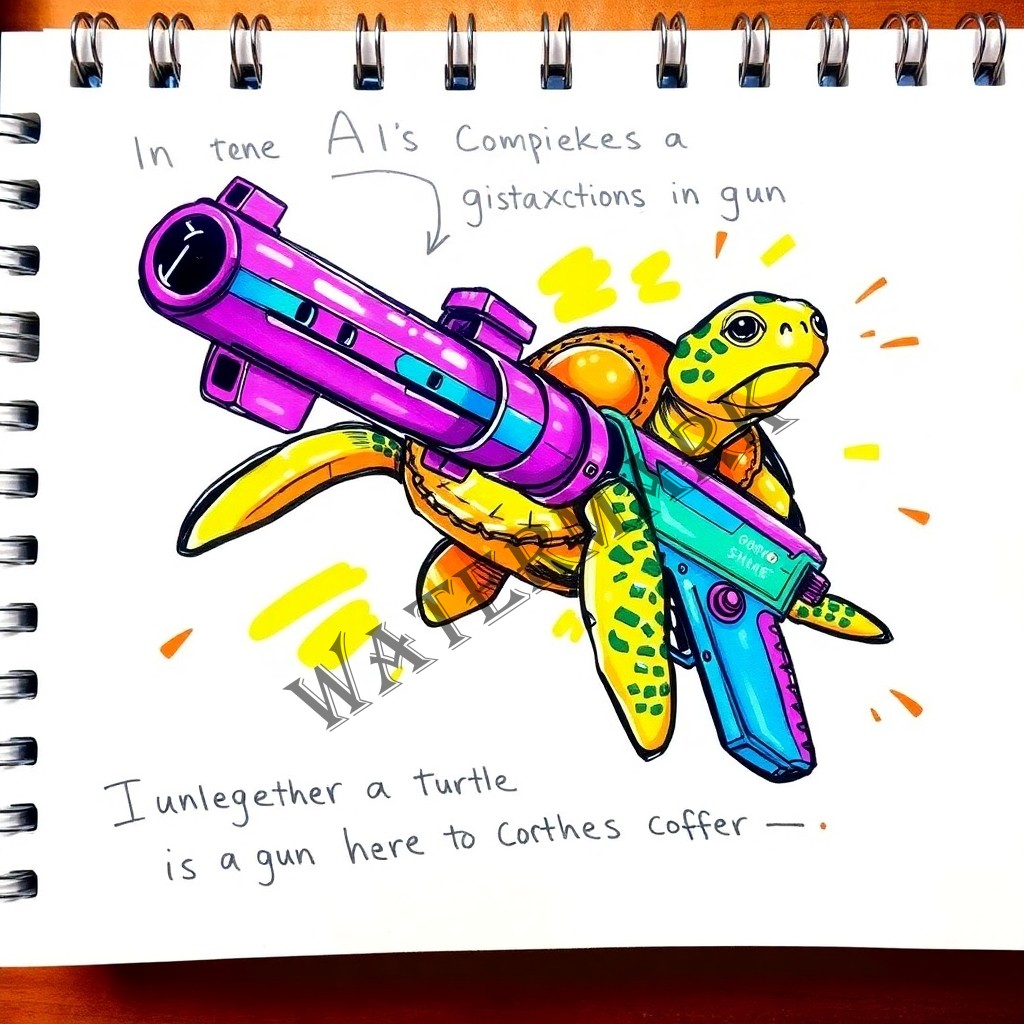

Fun Fact: Remember that time an AI thought a turtle was a rifle? AI can be hilariously wrong sometimes!

AI vs. Reality: When Image Recognition Goes Off the Rails

One of the most common areas where AI stumbles is image recognition. While AI models can often identify objects with remarkable accuracy, they can also be easily fooled, leading to some comical misclassifications.

- The Turtle-Rifle Incident: In a now-famous example, researchers at MIT 3D-printed a turtle that an image classifier (Google’s InceptionV3) consistently misidentified as a rifle from most viewing angles (Athalye et al., 2017). This wasn’t a one-off glitch: the turtle’s texture had been deliberately engineered to fool the classifier, and the model was confident in its incorrect label, highlighting a fundamental vulnerability in how these systems perceive the world.

- Chihuahua or Muffin?: This internet meme went viral a few years ago, showcasing a grid of images alternating between adorable Chihuahuas and blueberry muffins. The joke was that they can look surprisingly similar to the untrained eye (or algorithm). It wasn’t a formal AI experiment, but it neatly illustrates a general problem in pattern recognition: any system that relies on visual cues, human or machine, can struggle when two categories share the same coarse shapes, colors, and textures.

- The “Adversarial Patch” Problem: Researchers have also shown that small, specially designed stickers (known as “adversarial patches”) placed in a scene can trick an image classifier into misclassifying it. In the original demonstration, a patch set next to a banana caused the classifier to label the image as a toaster (Brown et al., 2017). Related research has reported sticker-based attacks that cause stop signs to be read as speed limit signs. This has serious security implications for AI systems, particularly in applications like autonomous driving.

These are not isolated incidents. Researchers at MIT have noted that deep neural networks are broadly vulnerable to adversarial examples, inputs that look almost identical to natural data yet are classified incorrectly (Madry et al., 2017). In other words, even state-of-the-art models are susceptible to these kinds of tricks.
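To make the idea of an adversarial example concrete, here is a minimal sketch of one well-known attack, the fast gradient sign method (FGSM), applied to a toy logistic-regression classifier. It is not the method used in the turtle or patch papers, and the weights, input, and `epsilon` below are made-up values chosen purely for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, x):
    """Probability that x belongs to class 1 under a logistic model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(weights, bias, x, label, epsilon):
    """One FGSM step: nudge every feature by epsilon in the direction
    that increases the cross-entropy loss for the true label."""
    p = predict_proba(weights, bias, x)
    # For a logistic model, d(loss)/d(x_i) = (p - label) * w_i.
    grad = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical model and input (illustrative numbers, not from any real system).
weights = [0.9, -1.2, 0.5, 0.8, -0.7, 1.1, -0.4, 0.6]
bias = 0.0
x = [0.2, -0.1, 0.3, 0.1, -0.2, 0.1, -0.1, 0.2]
true_label = 1
epsilon = 0.2  # maximum change allowed per feature

x_adv = fgsm(weights, bias, x, true_label, epsilon)
clean_p = predict_proba(weights, bias, x)
adv_p = predict_proba(weights, bias, x_adv)

print(f"clean input:       P(class 1) = {clean_p:.3f}")
print(f"adversarial input: P(class 1) = {adv_p:.3f}")
```

No single feature moves by more than `epsilon`, yet the many small nudges all push the score the same way, which is enough to carry the prediction across the 0.5 decision boundary. Real image attacks work on the same principle, only with thousands of pixels instead of eight features.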

Lost in Translation (and Logic): AI’s Struggles with Language

AI’s difficulties aren’t limited to the visual realm. Natural language processing (NLP), the branch of AI that deals with understanding and generating human language, is another area ripe with humorous (and sometimes concerning) errors.

- The Nonsense Generator: Earlier text-generating models, like GPT-2, were notorious for producing text that was grammatically correct but often nonsensical. They could create long, flowing paragraphs that sounded impressive at first glance but, on closer inspection, did not hold together logically. A prime example is the “Unicorn” story generated by OpenAI’s GPT-2. The prose convincingly mimicked human writing, yet the story itself was fantastical and illogical, showcasing the model’s lack of real-world understanding (Radford et al., 2019). OpenAI initially withheld the full model over concerns about misuse. As reported by The Guardian (Hern, 2019), its creators worried it might be too dangerous to release because it could be used for malicious purposes, like generating fake news. The decision sparked ethical debates about the responsible release of powerful AI technologies.

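- Translation Troubles: While vastly improved, automated translation workflows still produce hilarious errors. A classic example is a bilingual road sign in Swansea, Wales, whose English text read, “No entry for heavy goods vehicles. Residential site only.” The Welsh text beneath it actually said, “I am not in the office at the moment. Send any work to be translated.” The Welsh was reportedly an out-of-office email auto-reply from a translator, printed without anyone checking it. Strictly speaking, this was a failure of blind trust in an automated response rather than of a translation model, but it highlights the same risk: without a human who understands the language, nuance and context are lost and nonsense goes straight to print, especially for less widely spoken languages.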

- Bias and Stereotypes: More concerningly, AI language models can also perpetuate harmful biases and stereotypes. These systems are trained on vast amounts of text data, much of which reflects existing societal biases. As a result, they can reproduce those biases in what they generate, for instance by tying professions to a particular gender, which can lead to unfair or discriminatory outcomes. Early word embedding models, for example, would often associate “doctor” with “male” and “nurse” with “female,” reflecting historical gender biases in these professions. This issue is well documented by Bolukbasi et al. (2016), who found that these biases were deeply embedded in the data used to train the models.
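The word embedding bias described in the last item can be sketched in a few lines. The example below measures how strongly a word vector leans along a “gender direction” (the difference between the vectors for “he” and “she”) and then removes that component, in the spirit of the neutralize step discussed by Bolukbasi et al. (2016). The three-dimensional vectors are invented for illustration and do not come from any trained model.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cosine(a, b):
    """Cosine similarity, defined as 0.0 if either vector is all zeros."""
    na, nb = math.sqrt(dot(a, a)), math.sqrt(dot(b, b))
    return dot(a, b) / (na * nb) if na and nb else 0.0

# Hypothetical toy embeddings (real models use hundreds of dimensions).
toy_vectors = {
    "he":     [1.0, 0.2, 0.1],
    "she":    [-1.0, 0.2, 0.1],
    "doctor": [0.4, 0.9, 0.1],
    "nurse":  [-0.5, 0.8, 0.2],
    "table":  [0.0, 0.1, 0.9],
}

# Direction that separates "he" from "she" in the embedding space.
gender_direction = [h - s for h, s in zip(toy_vectors["he"], toy_vectors["she"])]

def bias_score(word):
    """Positive leans toward 'he', negative toward 'she', zero is neutral."""
    return cosine(toy_vectors[word], gender_direction)

def neutralize(vector, direction):
    """Remove the component of `vector` that lies along `direction`."""
    scale = dot(vector, direction) / dot(direction, direction)
    return [v - scale * d for v, d in zip(vector, direction)]

for word in ("doctor", "nurse", "table"):
    print(f"{word:>6}: bias score = {bias_score(word):+.3f}")

debiased_doctor = neutralize(toy_vectors["doctor"], gender_direction)
debiased_score = cosine(debiased_doctor, gender_direction)
print(f"doctor after neutralizing: {debiased_score:+.3f}")
```

In this toy setup “doctor” scores positive and “nurse” negative, mirroring the stereotype, while an unrelated word like “table” sits at zero. Projecting out the gender direction brings the score for “doctor” to zero without touching its other components.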

When AI Tries to Be Creative (and Fails)

AI’s attempts at creativity often result in some of the most entertaining failures. While AI can now generate art, music, and even stories, the results are often bizarre, unpredictable, and unintentionally funny.

- The “Deep Dream” Nightmare: Google’s DeepDream, a program that amplifies the patterns a neural network detects in an image, became an internet sensation for producing surreal and often disturbing pictures. Feed it a photo of a landscape, and it might transform it into a hallucinatory scene filled with bizarre animal-like shapes and swirling patterns. While visually striking, these images are far from what most people would consider “art.” They were often described as “nightmarish” or “psychedelic” by news outlets, including The Verge (Vincent, 2015). This highlighted the limitations of AI in understanding and replicating the aesthetic and emotional qualities of human art.

- AI-Generated Music: AI-composed music can range from intriguing to utterly unlistenable. While some AI systems can create reasonably pleasant melodies, their output often lacks the emotional depth and structure of human-composed music. The results can be jarring, repetitive, or simply strange. A notable example is the AI-generated “Daddy’s Car,” a Beatles-style song created by Sony CSL Research Laboratory. While it was an impressive technical feat, many listeners found the song unsettling and lacking in genuine musicality (Oremus, 2016).

- AI-Generated Scripts: In 2016, a short film called “Sunspring” was released, written entirely by an AI named Benjamin. The film’s dialogue was nonsensical and bizarre, making for a viewing experience that was both confusing and hilarious, and the film quickly gained notoriety for it. The film’s creators themselves described it as “totally incoherent” (Metz, 2016). This highlighted the vast gulf between AI’s ability to mimic language patterns and its ability to create meaningful, coherent narratives.

Why Does AI Make These Mistakes?

These examples raise an important question: why does AI, with all its computational power, make such seemingly silly mistakes? There are several key reasons:

- Lack of Common Sense: AI systems, especially those based on deep learning, are primarily pattern recognizers. They excel at finding correlations in data but lack the common sense reasoning abilities that humans possess. They don’t understand the underlying concepts behind the data they process, making them prone to errors when faced with situations that require even basic real-world knowledge.

- Data Dependency: AI models are heavily reliant on the data they are trained on. If the training data is incomplete, biased, or contains errors, the AI will inevitably reflect those flaws in its output. This is why AI systems can perpetuate stereotypes or fail to generalize to new situations.

- Adversarial Attacks: As we’ve seen, AI systems can be deliberately tricked by adversarial examples—carefully crafted inputs designed to exploit their weaknesses. This vulnerability highlights the need for more robust and secure AI systems.

- Limited Understanding of Context: AI often struggles with context. It can process individual words or images but has difficulty understanding the broader context in which they appear. This can lead to misinterpretations, especially in language processing.

The Future of AI: Learning from Mistakes

Despite these limitations, AI is constantly evolving. Researchers are actively working to address these challenges by:

- Developing more robust AI models: This includes creating models that are less susceptible to adversarial attacks and better at handling noisy or incomplete data.

- Incorporating common sense reasoning: Researchers are exploring ways to imbue AI systems with a basic understanding of the world, allowing them to make more informed decisions.

- Improving data quality and diversity: Efforts are underway to create more comprehensive and representative datasets that can reduce bias and improve the overall performance of AI models.

- Focusing on explainability: Making AI systems more transparent and understandable is crucial for identifying and correcting errors. This involves developing methods for explaining why an AI made a particular decision.
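The explainability item above is easiest to picture for a linear model, where each feature’s contribution to the score can simply be read off. The sketch below is a deliberately simple illustration, not a method from any of the cited work. The feature names, weights, and input are hypothetical, and deep networks need far more involved attribution techniques.

```python
def explain_linear(weights, bias, x, feature_names):
    """Return the model score and per-feature contributions,
    ranked from most to least influential."""
    contributions = {
        name: w * xi for name, w, xi in zip(feature_names, weights, x)
    }
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked

# Hypothetical "rifle vs. not rifle" scorer; a positive score means "rifle".
feature_names = ["has_shell", "is_green", "has_barrel_like_texture"]
weights = [-2.0, -0.5, 3.0]
bias = 0.1
x = [1.0, 1.0, 0.9]  # a turtle whose texture resembles a barrel

score, ranked = explain_linear(weights, bias, x, feature_names)
print(f"score = {score:.2f}")
for name, contribution in ranked:
    print(f"  {name:<24} {contribution:+.2f}")
```

Here the ranking shows that a single texture feature outweighs the evidence for “turtle,” which is the kind of insight an explanation is meant to surface so the error can be found and corrected.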

Conclusion: Embracing the Imperfections

The examples of AI fails we’ve explored in this post serve as a valuable reminder that AI is not a magical solution to all problems. It is a powerful tool but one with significant limitations. By understanding these limitations and embracing the occasional hilarious mistake, we can develop a more realistic and nuanced perspective on AI’s capabilities and its role in our lives. These fails are not just funny anecdotes; they are valuable learning opportunities that can help us build better, more reliable, and ultimately more human-centered AI systems. As AI continues to evolve, we can expect more such instances of AI going wrong, providing us with both amusement and valuable insights into the nature of intelligence itself.

Additional Resources and References

- Athalye, A., Engstrom, L., Ilyas, A., & Kwok, K. (2017). Synthesizing robust adversarial examples. arXiv preprint arXiv:1707.07397.

- Bolukbasi, T., Chang, K. W., Zou, J. Y., Saligrama, V., & Kalai, A. T. (2016). Man is to computer programmer as woman is to homemaker? Debiasing word embeddings. In Advances in Neural Information Processing Systems (pp. 4349-4357).

- Brown, T. B., Mané, D., Roy, A., Abadi, M., & Gilmer, J. (2017). Adversarial patch. arXiv preprint arXiv:1712.09665.

- Hern, A. (2019). New AI fake text generator may be too dangerous to release, say creators. The Guardian.

- Madry, A., Makelov, A., Schmidt, L., Tsipras, D., & Vladu, A. (2017). Towards deep learning models resistant to adversarial attacks. arXiv preprint arXiv:1706.06083.

- Metz, C. (2016). A movie written by AI is wonderfully bad. Wired.

- Oremus, W. (2016). This Pop Song Was Written by Artificial Intelligence. Slate.

- Radford, A., Wu, J., Child, R., Luan, D., Amodei, D., & Sutskever, I. (2019). Language models are unsupervised multitask learners. OpenAI Blog, 1(8), 9.

- Vincent, J. (2015). Google’s artificial intelligence is dreaming, and it’s kind of terrifying. The Verge.
