Artificial Intelligence (AI) has become an integral part of our lives, transforming everything from communication to work. However, the journey of AI began long before the modern-day tech boom. The early years of AI research, starting from the mid-20th century, were a period of imagination, experimentation, and groundbreaking discoveries that set the foundation for the intelligent systems we know today. Let’s journey back in time to explore the origins and critical milestones of AI’s early development.

The Concept of Machine Intelligence:

Roots in Philosophy

The idea of machine intelligence predates computers and digital systems. The ancient Greek philosopher Aristotle formalized rules of logical reasoning in his work on the syllogism. In the 17th century, the mathematician Gottfried Wilhelm Leibniz, who also built a mechanical calculator, dreamed of a formal “calculus of reasoning” that a machine could carry out. By the 20th century, as early computers began to take shape, the idea of creating “thinking machines” started to seem feasible.

In 1950, British mathematician and logician Alan Turing published his paper “Computing Machinery and Intelligence,” which opens with the question “Can machines think?” Rather than answer it directly, Turing proposed the “imitation game,” now known as the Turing Test: a machine passes if a human interrogator, conversing with it by text, cannot reliably tell it apart from a human. This revolutionary concept set the stage for the next wave of AI research.

The Birth of Artificial Intelligence:

The Dartmouth Conference of 1956

The birth of AI as a field is often traced back to a historic event: the 1956 Dartmouth Conference. Organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, the conference gathered prominent thinkers to discuss the possibility of creating machines that could simulate aspects of human intelligence. McCarthy, a computer scientist, is credited with coining the term “artificial intelligence” in the 1955 proposal for this workshop.

The excitement at the Dartmouth Conference ignited a new field of study. The attendees believed machines could soon be programmed to solve complex problems, learn from experience, and improve their capabilities. This optimism led to a flurry of research grants and investments, propelling AI research forward.

Early AI Programs:

The Dawn of Machine Problem-Solving

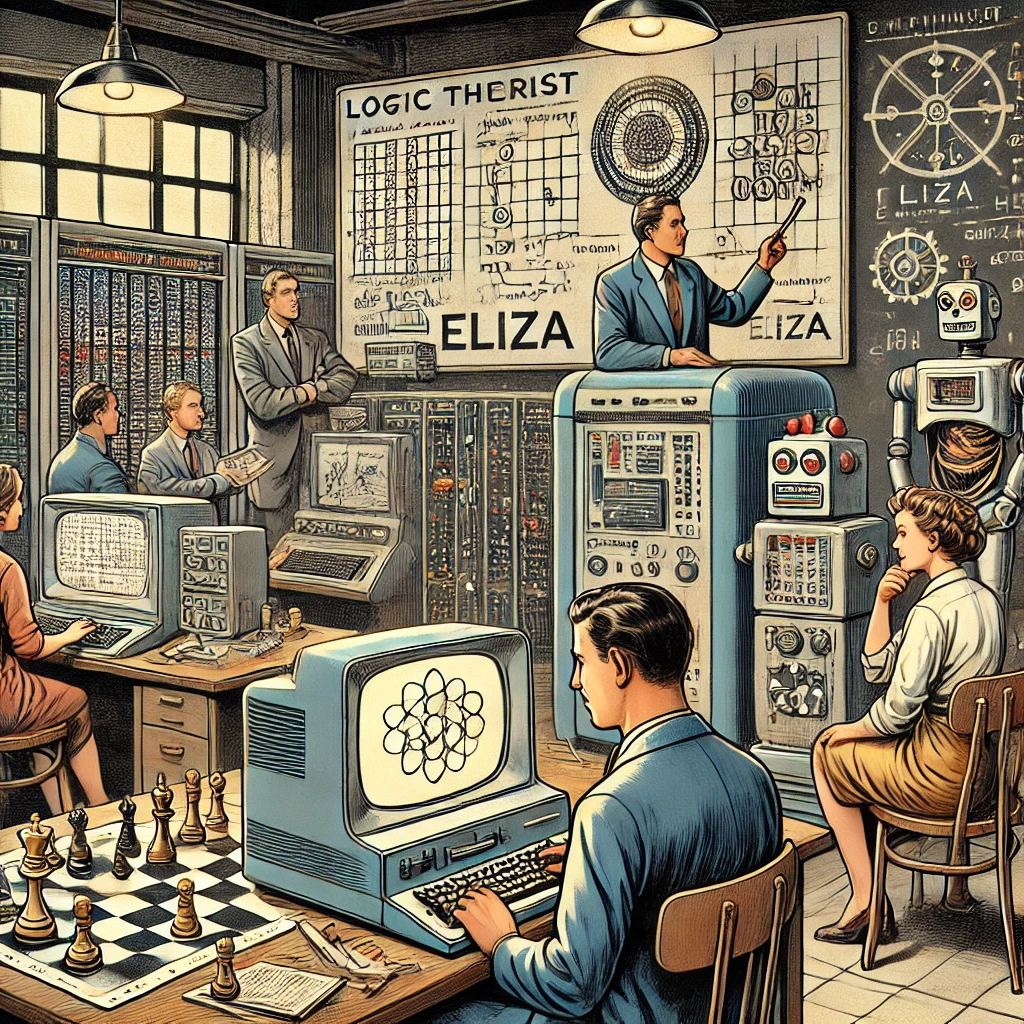

Around the time of the Dartmouth Conference, researchers created some of the earliest AI programs, each designed to tackle specific problems. Two notable projects stand out from this period:

- Logic Theorist (1955-1956): Developed by Allen Newell and Herbert A. Simon, with programmer Cliff Shaw, the Logic Theorist could prove theorems in symbolic logic, eventually proving 38 of the first 52 theorems in chapter 2 of Whitehead and Russell’s Principia Mathematica. Often considered the first “artificial intelligence” program, it used symbolic reasoning to replicate human problem-solving processes. The Logic Theorist demonstrated that computers could perform logical reasoning tasks and laid the groundwork for future AI research.

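- The General Problem Solver (1957): Another milestone program created by Newell, Simon, and Shaw, the General Problem Solver (GPS) was designed to solve a variety of problems using “means-ends analysis”: it compares the current state with the goal, identifies a difference between them, and applies an operator that reduces that difference, first setting up subgoals if the operator cannot yet be applied. In practice, it could only solve simple, well-formalized problems such as the Tower of Hanoi, but GPS helped establish heuristic search, which uses rules of thumb to narrow the search instead of trying every possibility, much as people take shortcuts when making decisions.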

These programs showcased the potential of AI, demonstrating that machines could be designed to reason in ways that were once thought to be uniquely human.
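Means-ends analysis can be sketched in a few dozen lines of Python. This is a minimal illustration of the general idea described above, not a reconstruction of Newell and Simon’s original program; the `Operator` class, the `achieve` and `achieve_all` helpers, and the errand domain are all hypothetical, invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Operator:
    name: str
    preconds: tuple      # facts that must hold before the operator can be applied
    add: tuple           # facts the operator makes true
    delete: tuple = ()   # facts the operator makes false


def achieve_all(state, goals, ops, stack):
    """Achieve each goal in order, then confirm no later step undid an earlier one."""
    plan = []
    for goal in goals:
        result = achieve(state, goal, ops, stack)
        if result is None:
            return None
        state, steps = result
        plan += steps
    if not all(g in state for g in goals):
        return None  # a later step clobbered an earlier goal
    return state, plan


def achieve(state, goal, ops, stack):
    """One means-ends step: reduce the difference `goal` with a relevant operator."""
    if goal in state:
        return state, []  # no difference to reduce
    if goal in stack:
        return None  # already pursuing this goal higher up; avoid infinite recursion
    for op in ops:
        if goal in op.add:  # this operator would remove the difference
            # Subgoals: first achieve the operator's own preconditions.
            result = achieve_all(state, op.preconds, ops, stack + [goal])
            if result is None:
                continue  # try another relevant operator
            new_state, steps = result
            new_state = new_state.difference(op.delete).union(op.add)
            return new_state, steps + [op.name]
    return None


# Hypothetical errand domain used only for illustration.
OPS = [
    Operator("walk home to bank", ("at home",), ("at bank",), ("at home",)),
    Operator("withdraw cash", ("at bank", "have card"), ("have money",)),
    Operator("walk bank to shop", ("at bank",), ("at shop",), ("at bank",)),
    Operator("buy milk", ("have money", "at shop"), ("have milk",), ("have money",)),
]

if __name__ == "__main__":
    result = achieve_all(frozenset({"at home", "have card"}), ("have milk",), OPS, [])
    print(result[1] if result else "no plan found")
```

Run as a script, this prints the plan `['walk home to bank', 'withdraw cash', 'walk bank to shop', 'buy milk']`. The naive solver is sensitive to goal order: if "buy milk" lists `"at shop"` before `"have money"`, it walks to the shop first, can no longer reach the bank, and finds no plan. Interacting subgoals of this kind are a known weakness of simple means-ends solvers.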

Early Language and Learning Programs:

IBM and ELIZA

By the 1960s, researchers began exploring ways to develop AI systems that could understand and generate human language. One of the early successes in this area was ELIZA, a program created by MIT researcher Joseph Weizenbaum in 1966. Its best-known script, DOCTOR, simulated a psychotherapist: ELIZA scanned the user’s input for keywords and applied pre-programmed rules that turned the input into a reply, often by reflecting the user’s own words back as a question. Although it had no real understanding of the conversation, ELIZA highlighted the potential of natural language processing and became one of the first “chatbots.”
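The keyword-and-template idea behind ELIZA can be illustrated with a short Python sketch. Weizenbaum’s actual DOCTOR script used a richer system of ranked keywords and decomposition and reassembly rules; the `RULES`, `REFLECTIONS`, and `respond` names and the three rules below are hypothetical simplifications written for this example.

```python
import re

# Hypothetical, heavily simplified rules in the spirit of ELIZA's DOCTOR script:
# each rule pairs a keyword pattern with a response template.
RULES = [
    (re.compile(r"\bI need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]

# Pronoun swaps so that "my exams" is echoed back as "your exams".
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}

FALLBACK = "Please tell me more."


def reflect(fragment: str) -> str:
    """Swap first- and second-person words in a captured fragment."""
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.lower().split())


def respond(user_input: str) -> str:
    """Return the reply from the first matching rule, or a generic prompt."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*(reflect(group) for group in match.groups()))
    return FALLBACK


if __name__ == "__main__":
    for line in ["I need a vacation.", "I am worried about my exams", "The weather is nice"]:
        print(respond(line))
```

For instance, `respond("I am worried about my exams")` returns "How long have you been worried about your exams?", while an input that matches no keyword falls back to "Please tell me more." Nothing here models meaning, which is exactly the limitation noted above.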

Even earlier, at IBM, computer scientist Arthur Samuel had spent the 1950s developing a checkers-playing program that could learn from its mistakes. Samuel’s program improved its strategies by learning from past games, including games played against itself. It marked one of the first instances of “machine learning,” a term Samuel popularized in 1959, and paved the way for future learning-based systems.

The Rise and Fall of Early AI Optimism: AI Winters

As AI research progressed, there was immense optimism about what AI could achieve. The 1960s and early 1970s brought early progress in computer vision, language processing, and robotics. Governments and institutions invested heavily in AI, believing human-level AI was just around the corner. However, the technology was not yet ready to deliver on these ambitious promises.

By the mid-1970s, progress in AI had slowed, and funding was drastically cut, partly in response to critical assessments such as the UK’s 1973 Lighthill Report. Early AI systems could not scale from simple, well-defined problems to complex real-world ones, and computers lacked the necessary processing power and memory. This downturn became known as the first “AI Winter.” Researchers had overestimated the speed of progress, and funding agencies lost interest in supporting AI research.

The Foundations of Modern AI: 1980s Revival

Despite setbacks, AI research continued in niche areas, and the 1980s brought new hope. The revival was driven by expert systems: programs designed to mimic the decision-making abilities of human experts in specific fields, such as medical diagnosis and financial analysis. These systems, built on large collections of if-then rules, were successfully deployed in industry, reigniting interest and funding in AI research.
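The rule-based approach can be illustrated with a minimal forward-chaining sketch in Python: an inference engine repeatedly fires any if-then rule whose conditions are all known facts until nothing new can be derived. This is one common inference strategy, not a reconstruction of any particular historical system (MYCIN, for example, reasoned backward from goals and weighted conclusions with certainty factors). The car-diagnosis rules and the `forward_chain` name are hypothetical.

```python
# Hypothetical toy rule base: each rule maps a set of required facts to a conclusion.
RULES = [
    ({"engine_cranks_slowly", "headlights_dim"}, "battery_weak"),
    ({"battery_weak", "battery_older_than_5_years"}, "recommend_replace_battery"),
    ({"engine_does_not_crank", "lights_work"}, "check_starter_motor"),
]


def forward_chain(facts: set[str], rules: list[tuple[set[str], str]]) -> set[str]:
    """Fire rules whose conditions are all known until no new facts appear."""
    known = set(facts)  # copy so the caller's set is not modified
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)  # a new fact may enable further rules
                changed = True
    return known


if __name__ == "__main__":
    observed = {"engine_cranks_slowly", "headlights_dim", "battery_older_than_5_years"}
    print(sorted(forward_chain(observed, RULES)))
```

Here the first rule derives `battery_weak`, which in turn lets the second rule conclude `recommend_replace_battery`. Keeping knowledge in a separate rule list, rather than in program logic, is what let expert-system builders grow a knowledge base by adding rules.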

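This period also saw the growth of machine learning, an approach in which AI models improve by identifying patterns in data, along with renewed interest in neural networks after the backpropagation training method was popularized in 1986. Together, these developments set the stage for modern AI.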

Legacy and Lessons from the Early Years of AI

Boundless ambition, imaginative ideas, and foundational achievements marked the early years of AI. The pioneers of this era laid the groundwork for the neural networks, deep learning, and advanced AI systems we see today. Their achievements, together with the lessons learned from periods of overhyped expectations and funding cuts, shaped the field into a more sustainable, data-driven discipline.

Today, as AI enters our daily lives through virtual assistants, recommendation systems, and self-driving cars, it’s essential to appreciate the journey that began over 70 years ago. The visionaries of early AI may not have lived to realize their dreams fully, but their contributions continue to drive AI forward, transforming science fiction into reality.

Final Thoughts

The early years of AI represent a fascinating chapter of technological innovation and human imagination. The field has come a long way since the days of Turing and McCarthy, and thanks to their pioneering work, we continue to make incredible strides in artificial intelligence. As AI technology advances further, the lessons from the early years remind us to maintain a balanced perspective, celebrating progress while remaining grounded in the complexity of this remarkable field.
