AI’s “Choose Your Fighter” Moment

Imagine you’re assembling a team for a trivia night. Instead of relying on a single know-it-all, you gather a group of specialists: a historian, a scientist, a pop culture guru, and a sports analyst. When a question arises, the right expert steps up to answer. This strategy leverages individual strengths, ensuring top performance across diverse topics.

In the realm of artificial intelligence, a similar strategy is employed through Mixture of Experts (MoE) models. These models consist of multiple specialized “expert” networks, each adept at handling specific types of inputs. A gating mechanism decides which experts to engage for a given task, optimizing efficiency and performance. This approach mirrors our trivia team analogy, where the model dynamically selects the most suitable experts based on the problem at hand.

But what makes MoE models particularly compelling in today’s AI landscape? How do they balance the trade-off between increased capacity and computational efficiency? And as we push the boundaries of model scalability, what challenges and philosophical questions arise regarding their deployment and ethical implications?

In this post, we’ll delve into the mechanics of MoE models, explore their real-world applications, and address these pressing questions. By the end, you’ll have a comprehensive understanding of why MoE models are gaining traction and how they might shape the future of artificial intelligence.

🧠 The Basics: What Is a Mixture of Experts?

Imagine you’re running a massive company with dozens of departments—each filled with experts in their field. But here’s the twist: when a new task comes in, you don’t need to involve every department. Instead, a clever assistant quickly figures out which few departments are best suited for the task and sends it their way. Fast, efficient, and smart.

This is, in a nutshell, the magic behind MoE models.

At a high level, an MoE model is a type of neural network architecture that uses multiple sub-models, or “experts,” and only activates a few of them at a time for any given input. This approach allows the model to scale up in size without proportionally increasing computation costs.

Rather than forcing a single model to learn everything, MoEs divide the labor—letting specialized parts of the model handle specific types of inputs.

🧩 Breaking Down the Components

Let’s get into how it actually works, step-by-step:

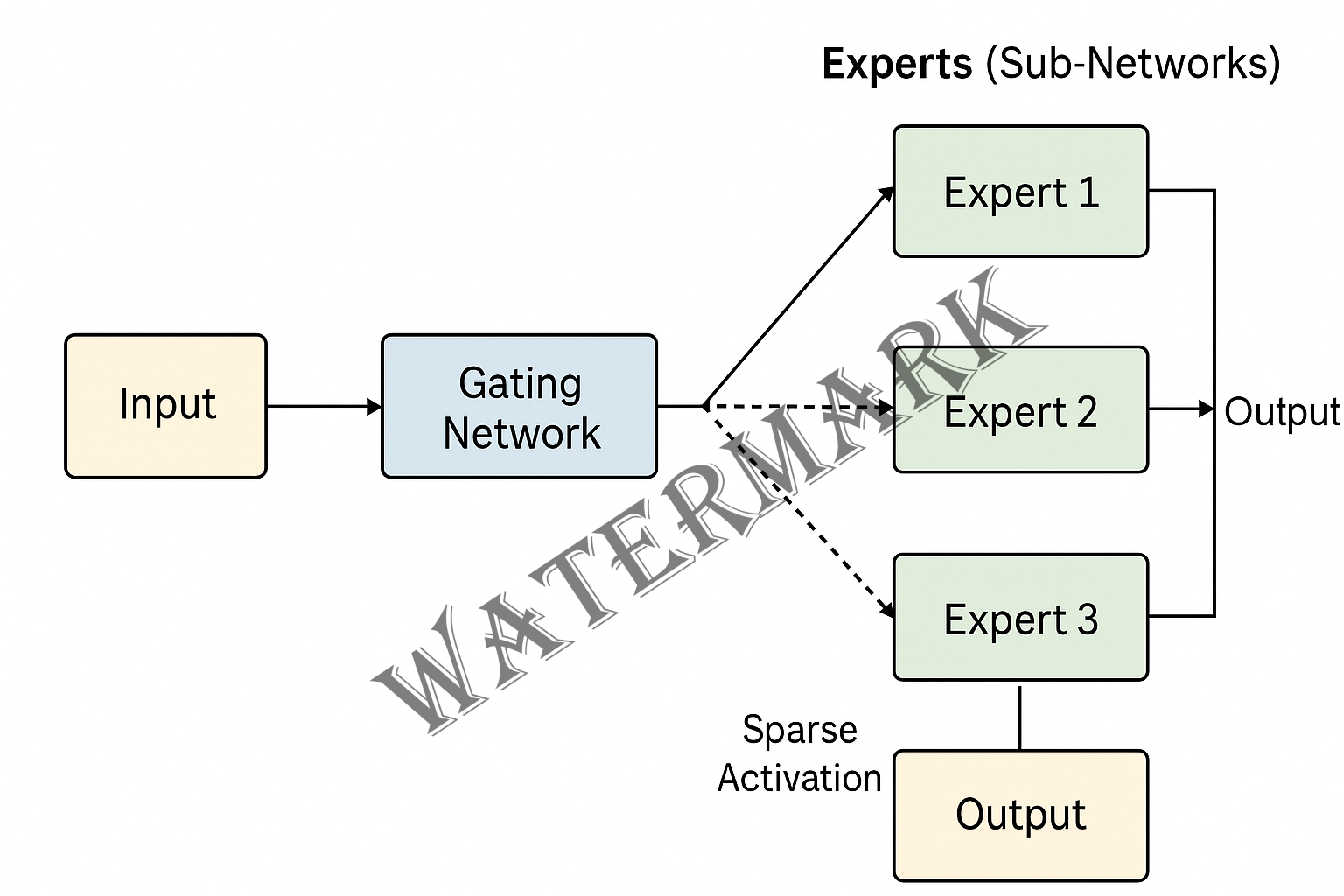

1. Experts (Sub-Networks)

- Think of each expert as a small neural network trained to handle certain types of data patterns.

- In a large MoE model, there might be dozens (or even thousands) of these expert networks.

- Each expert could become good at certain linguistic patterns, domains (like legal vs. medical), or even specific languages in multilingual models.
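To make “small neural network” concrete, here is a minimal sketch of one expert as a two-layer feed-forward block (project up, apply ReLU, project back down). That is roughly the shape experts take inside Transformer MoE layers. It uses plain Python lists and random, untrained weights. The `Expert` class and the sizes are illustrative assumptions, not any real model’s code.

```python
import random

class Expert:
    """A tiny feed-forward expert: d_model -> d_hidden -> d_model (hypothetical sizes)."""

    def __init__(self, d_model: int, d_hidden: int, seed: int = 0):
        rng = random.Random(seed)
        # Random, untrained weights; a real expert learns these during training.
        self.w_in = [[rng.gauss(0.0, 0.1) for _ in range(d_model)] for _ in range(d_hidden)]
        self.w_out = [[rng.gauss(0.0, 0.1) for _ in range(d_hidden)] for _ in range(d_model)]

    def __call__(self, x: list[float]) -> list[float]:
        # First projection followed by a ReLU nonlinearity.
        hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in self.w_in]
        # Second projection back to the model dimension.
        return [sum(w * h for w, h in zip(row, hidden)) for row in self.w_out]

expert = Expert(d_model=4, d_hidden=8, seed=42)
print(expert([1.0, 0.5, -0.5, 2.0]))  # a 4-dimensional output vector
```

Every expert in a layer has this same shape but its own weights, which is what lets different experts drift toward different patterns during training. Production models often use other activations; Mixtral, for example, uses SwiGLU.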

2. The Gating Network (The Decision-Maker)

- This is the traffic controller of the MoE.

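- When a new input comes in (say, a sentence or an image), the gating network scores it and decides which experts to activate. In Transformer-based MoEs, this decision is usually made separately for each token rather than once per sentence.

- Typically, only a handful of experts (often just one or two) are activated per token, even if 64 or more exist. This is called sparse activation.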

🔁 Example: Say the model is asked to translate a sentence from French to English. The gating network may activate one expert that specializes in French syntax and another that’s tuned for English semantics.
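The gating step can be sketched in a few lines. This assumes a linear router followed by a softmax and top-k selection, in the spirit of the sparsely-gated layer from Shazeer et al. (2017). `route` and the toy router weights are hypothetical; the point is that only `k` experts end up with a nonzero weight for the token.

```python
import math

def softmax(logits: list[float]) -> list[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def route(token: list[float], router_weights: list[list[float]], k: int = 2) -> dict[int, float]:
    """Score every expert for one token, keep the top-k, and renormalize their weights."""
    # One logit per expert: dot product of the token with that expert's router row.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    probs = softmax(logits)
    # Indices of the k highest-probability experts (sparse activation).
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}

# Four experts, two-dimensional token; the router logits come out as [0.1, 2.0, 0.5, 1.5].
weights = [[0.1, 0.0], [2.0, 0.0], [0.5, 0.0], [1.5, 0.0]]
print(route([1.0, 0.0], weights, k=2))
```

Experts 1 and 3 win, with mixing weights of about 0.62 and 0.38; experts 0 and 2 are never run for this token. Renormalizing over the selected experts is one common choice. Switch Transformer, which routes each token to a single expert, instead scales that expert’s output by its raw gate probability.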

3. Sparse Activation: Efficiency is Key

- This is where MoE models shine. Unlike traditional models where every part of the network runs for every input, MoE activates only a fraction of the total model.

- This allows you to scale up the number of parameters (model size) significantly without exploding compute costs.

- Example: An MoE model might have 200 billion parameters in total but use only about 20 billion of them for each token it processes.

This is the core idea behind what’s often called compute-efficient scaling—you get more brains without needing more power for every task.
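A quick back-of-the-envelope calculation shows where the savings come from. The sketch assumes a simplified, hypothetical layout: some parameters are shared by every token (attention, embeddings) and the rest sit in identical experts. It also uses the common rule of thumb of about 2 FLOPs per active parameter per token for a forward pass, which ignores attention over the context. Adding experts grows the total parameter count, while per-token compute depends only on `top_k`.

```python
def moe_sizes(shared: float, per_expert: float, num_experts: int, top_k: int):
    """Return (total params, active params per token, approx. forward FLOPs per token)."""
    total = shared + num_experts * per_expert
    active = shared + top_k * per_expert
    # Rule of thumb: about 2 FLOPs (one multiply, one add) per active parameter per token.
    flops_per_token = 2 * active
    return total, active, flops_per_token

# Hypothetical layout: 10B shared parameters and 5B parameters per expert, top-2 routing.
for n in (2, 8, 16, 38):
    total, active, flops = moe_sizes(10e9, 5e9, n, top_k=2)
    print(f"{n:>2} experts: {total / 1e9:>5.0f}B total, {active / 1e9:.0f}B active, "
          f"{flops / 1e9:.0f} GFLOPs/token ({active / total:.0%} of params used)")
```

With these made-up sizes, 38 experts reproduce the 200 billion total and 20 billion active split from the example above. Per-token compute stays at about 40 GFLOPs no matter how many experts are added.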

⚙️ Architectures & Implementation

Most MoE models today are based on Transformer architectures—the same family used in GPT, BERT, and other large language models.

Here’s how it usually plays out:

- In a Transformer layer, instead of having a fixed feed-forward layer, the MoE version replaces it with a set of expert feed-forward layers.

- A gating network scores the relevance of each expert to the current token (or chunk of input).

- The top-k experts are selected (often top-1, as in Switch Transformer, or top-2, as in GShard and Mixtral), and their outputs are combined, typically as a sum weighted by the gate scores.

- Noise is sometimes added to the gating scores during training, and an auxiliary load-balancing loss is commonly added to the training objective. Both help spread tokens across experts so that no single expert gets overloaded.
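The steps above can be combined into a minimal forward pass for one token through an MoE feed-forward layer. It scores the experts, optionally adds Gaussian noise to the scores during training, keeps the top-k, and returns the gate-weighted sum of the selected experts’ outputs. This is a sketch under simplifying assumptions. The noise uses a fixed `noise_std`, whereas the noisy top-k gating of Shazeer et al. (2017) learns an input-dependent noise scale. The experts are toy functions, and all names are hypothetical.

```python
import math
import random
from typing import Callable, Optional

Vector = list[float]

def moe_layer(token: Vector,
              experts: list[Callable[[Vector], Vector]],
              router_weights: list[Vector],
              k: int = 2,
              training: bool = False,
              noise_std: float = 1.0,
              rng: Optional[random.Random] = None) -> Vector:
    """Sparse MoE forward pass for a single token (illustrative sketch)."""
    if rng is None:
        rng = random.Random(0)
    # Router: one logit per expert.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    if training:
        # Fixed-scale Gaussian noise; the 2017 paper learns an input-dependent scale instead.
        logits = [z + rng.gauss(0.0, noise_std) for z in logits]
    # Keep the k highest-scoring experts; the rest are never evaluated.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the selected logits gives the mixing weights.
    m = max(logits[i] for i in top)
    exps = {i: math.exp(logits[i] - m) for i in top}
    total = sum(exps.values())
    output = [0.0] * len(token)
    for i in top:
        y = experts[i](token)  # only the selected experts run
        weight = exps[i] / total
        output = [o + weight * yi for o, yi in zip(output, y)]
    return output

# Toy experts that just scale their input by 1, 2, 3, and 4.
experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
router_weights = [[0.1, 0.0], [2.0, 0.0], [0.5, 0.0], [1.5, 0.0]]
print(moe_layer([1.0, 0.0], experts, router_weights, k=2))
```

Here experts 1 and 3 are selected, with weights of about 0.62 and 0.38, so the first output component is roughly 2 × 0.62 + 4 × 0.38 ≈ 2.76. Passing `training=True` can change which experts win, which is how the noise helps spread tokens across experts during training.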

🛠️ Popular Tools: Frameworks such as Google’s GShard, Microsoft’s DeepSpeed-MoE, and Meta’s Fairseq MoE help researchers build scalable MoE models.

The result? Instead of firing up all the experts every time (which would be slow and expensive), the model only activates the most relevant ones. This makes it much more efficient, especially as models scale to billions or even trillions of parameters.

Why is this important? Because training and running giant models like GPT-4 is insanely resource-intensive. MoE models let us have huge models with lots of expertise, but only use a small slice of them at any time—saving both time and money.

Here’s a metaphor: Imagine a hospital with 100 doctors on call. If every patient had to see all 100 doctors for every visit, it would be chaotic. Instead, MoE lets the hospital operate smarter—only the right specialists are called in for each case.

This dynamic expert selection is what makes MoE models exciting. They introduce specialization and modularity into AI in a way that mirrors how teams—and even the human brain—solve problems.

🌍 Real-World Examples

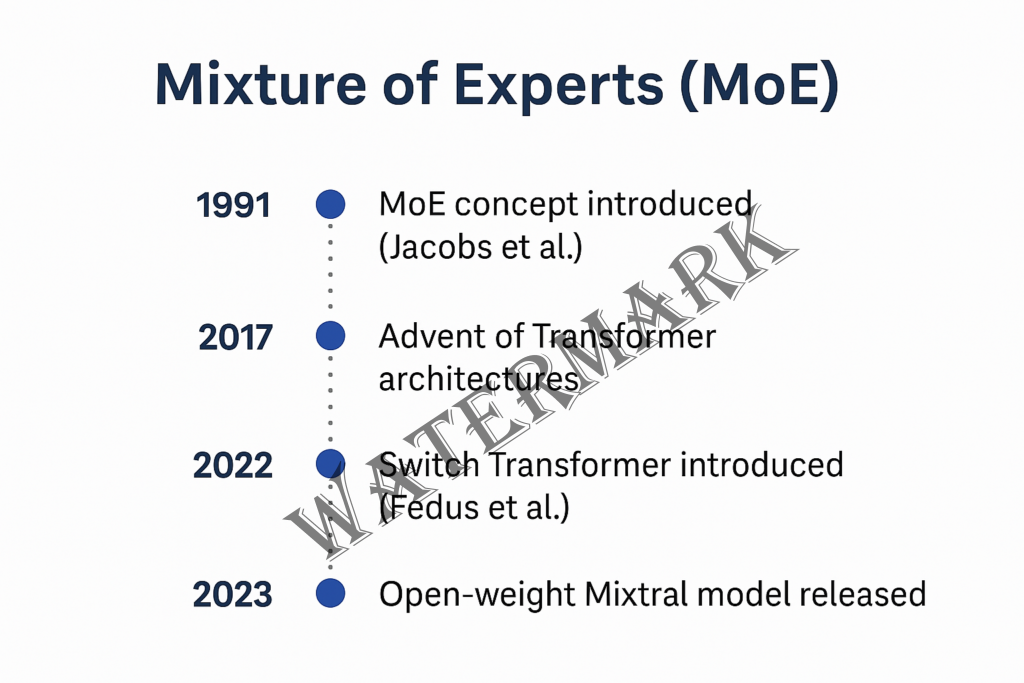

- Google’s Switch Transformer (Fedus et al., 2022): One of the most famous MoE models, scaling to 1.6 trillion parameters while keeping computation efficient.

- GLaM (Generalist Language Model) by Google AI: Achieved strong performance across benchmarks while activating only 2 of its 64 experts per MoE layer for each token, so roughly 97 billion of its 1.2 trillion parameters are used per token.

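- Expert Choice routing (Google) and NLLB-200 (Meta): Expert Choice lets each expert pick the tokens it will process, rather than each token picking experts, which keeps expert load balanced. Meta’s NLLB-200 uses MoE layers to translate across 200 languages.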
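Expert Choice routing reverses the direction of the decision. Instead of each token choosing its top-k experts, each expert chooses the tokens it scores highest, up to a fixed capacity, so every expert processes the same number of tokens by construction. This is a minimal sketch, assuming a precomputed token-by-expert score matrix. `expert_choice` and `capacity` are illustrative names, and in the published method the capacity is derived from a capacity factor rather than passed in directly.

```python
def expert_choice(scores: list[list[float]], capacity: int) -> dict[int, list[int]]:
    """scores[t][e] is the router affinity of token t for expert e.

    Each expert independently selects its `capacity` highest-scoring tokens,
    so a token may be picked by several experts, or by none.
    """
    num_tokens = len(scores)
    num_experts = len(scores[0])
    assignment = {}
    for e in range(num_experts):
        # Rank all tokens by this expert's affinity and keep the top `capacity`.
        ranked = sorted(range(num_tokens), key=lambda t: scores[t][e], reverse=True)
        assignment[e] = sorted(ranked[:capacity])
    return assignment

scores = [
    [0.9, 0.1],  # token 0
    [0.8, 0.2],  # token 1
    [0.7, 0.3],  # token 2
    [0.6, 0.4],  # token 3
]
print(expert_choice(scores, capacity=2))  # {0: [0, 1], 1: [2, 3]}
```

Under ordinary top-1 token choice, all four tokens would pick expert 0 (it has the higher score in every row) and expert 1 would sit idle. With expert choice, the work splits evenly. The trade-off is that some tokens may be processed by several experts and others by none.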

These models demonstrate the real promise of MoE: more capable, scalable AI systems that don’t burn through compute unnecessarily.

🤔 Why This Matters (and a Little Philosophy…)

This all raises a fun philosophical question: Does intelligence need to be monolithic, or is it better when it’s distributed?

In nature, we see division of labor everywhere—from ant colonies to human brains. Maybe our AI systems are evolving similarly, moving from general-purpose intelligence to modular collectives of specialized reasoning.

And then there’s the ethics and transparency angle. If only part of a model is activated, how do we know which experts influenced the output? Can we audit decisions made by partial networks? Should we?

📢 Why is Everyone Talking About MoE Again?

Over the past few months, MoE models have made a full-blown comeback in AI circles—from research papers and blog posts to trending GitHub repos and startup hype decks. So, what’s with the buzz? Why is everyone talking about MoE again like it’s the hot new thing (even though it’s been around since the 1990s)?

Well, a few timely shifts in AI are bringing MoE architectures right back into the spotlight—and this time, they’re not just promising; they’re delivering. Let’s unpack why MoEs are everywhere right now.

🚀 1. Scaling AI Is Getting Ridiculously Expensive

Large Language Models (LLMs) like GPT-4, Claude, and Gemini are growing in size and capability—but so are the costs of training and running them. Serving dense models at scale eats up bandwidth, compute, and budget, making sustainable AI adoption a real challenge.

Enter MoE models: they can scale up to trillions of parameters while only using a small subset of them per input. That means you get massive model capacity without paying a massive compute bill every time a user asks, “What’s the weather like in a haiku?”

📰 Real-world spotlight: Google’s Switch Transformer (Fedus et al., 2022) and GLaM showed that MoEs can match or beat the quality of large dense models while using a fraction of the compute per token.

🌍 2. AI Needs to Be Smarter—Not Just Bigger

In 2023 and 2024, the AI industry started shifting from “bigger is better” to “smarter is better.” With AI agents doing more complex things—coding, planning, multi-modal reasoning—the need for specialization became obvious.

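MoEs are built around this idea: different “experts” within the same model can focus on specific kinds of inputs. In the simplified picture, a calculus question lights up the math experts and a request to explain legal jargon lights up the legal-language experts. In practice, routing happens token by token, and analyses of models such as Mixtral suggest experts tend to specialize in syntactic or token-level patterns more than in clean topics like “math” or “law.”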

This modular intelligence aligns perfectly with the growing movement toward agentic AI, where different subsystems or skills are orchestrated together dynamically.

📌 Buzz moment: Cognition Labs’ “Devin,” an AI software engineer, sparked debate around how AI systems manage modular reasoning. MoE is a natural fit for that kind of architecture.

🧰 3. Open-Source & Tooling Have Caught Up

MoEs aren’t new—but building and scaling them used to be painful. That’s changed. Toolkits like DeepSpeed-MoE (from Microsoft), Fairseq-MoE (Meta), and JAX + T5X from Google have made it easier to implement MoEs efficiently. Now, anyone with a decent GPU setup and some PyTorch chops can start experimenting.

Better tooling has lowered the barrier to entry, and open-source MoE models are proving you don’t need Google-scale infrastructure to get in the game.

🚀 Project in focus: Mixtral 8x7B, an open-source MoE model released by Mistral AI, grabbed headlines in late 2023. Each layer routes every token to 2 of 8 experts, so only about 13 billion of its roughly 47 billion parameters are active per token, yet it matched or outperformed the much larger dense Llama 2 70B on most of the benchmarks Mistral reported.
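The “low compute” claim can be checked with arithmetic. The sketch below counts Mixtral 8x7B’s weights from the configuration values published with the model: hidden size 4096, expert feed-forward size 14336, 32 layers, and 8 experts with 2 active per token. It also uses grouped-query attention with 8 key/value heads of size 128, a 32,000-token vocabulary, and separate input and output embeddings. It ignores the small normalization weights, so treat the results as approximations. The function name is hypothetical.

```python
def mixtral_param_counts(d_model=4096, d_ff=14336, n_layers=32, n_experts=8,
                         top_k=2, n_heads=32, n_kv_heads=8, vocab=32000):
    """Approximate (total, active-per-token) parameter counts; norm weights are ignored."""
    head_dim = d_model // n_heads
    # Attention: query and output projections are d_model x d_model;
    # key and value projections are smaller because of grouped-query attention.
    attn = 2 * d_model * d_model + 2 * d_model * n_kv_heads * head_dim
    # Each expert is a SwiGLU feed-forward block with three weight matrices.
    expert = 3 * d_model * d_ff
    router = d_model * n_experts
    # Separate input embedding and output (LM head) matrices.
    embeddings = 2 * vocab * d_model
    total = n_layers * (attn + router + n_experts * expert) + embeddings
    active = n_layers * (attn + router + top_k * expert) + embeddings
    return total, active

total, active = mixtral_param_counts()
print(f"total ≈ {total / 1e9:.1f}B, active per token ≈ {active / 1e9:.1f}B ({active / total:.0%})")
```

This prints `total ≈ 46.7B, active per token ≈ 12.9B (28%)`, in line with the figures Mistral AI reported. The count also explains why “8x7B” is not 56 billion: only the feed-forward experts are replicated, while attention and embeddings are shared.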

🔍 4. MoEs Are Philosophically Interesting (and a Little Mysterious)

AI researchers love a good mystery, and MoEs raise some juicy philosophical questions.

If a model only uses part of its brain for each task:

- How do we interpret or audit those decisions?

- Which experts were involved in the outcome?

- Is it fair or transparent if only a few sub-networks shape an answer that looks like it came from the whole?
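One practical starting point for these questions is to record the router’s decisions. The sketch below assumes you can read the router logits for each token. `router_logits` here is made-up data standing in for values captured from a real model, not a specific library’s API. It logs which experts handled each token and counts how often each expert was used, the kind of trace an auditor could inspect.

```python
from collections import Counter

def trace_routing(tokens: list[str], router_logits: list[list[float]], k: int = 2):
    """Return per-token expert choices and overall expert usage counts."""
    trace = []
    usage = Counter()
    for token, logits in zip(tokens, router_logits):
        # The experts with the k largest logits are the ones that processed this token.
        chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
        trace.append((token, chosen))
        usage.update(chosen)
    return trace, usage

tokens = ["The", "contract", "is", "void"]
router_logits = [
    [2.0, 0.1, 1.5, -1.0],
    [0.2, 3.0, -0.5, 2.5],
    [1.8, 0.0, 1.9, -2.0],
    [0.0, 2.2, 0.1, 2.4],
]
trace, usage = trace_routing(tokens, router_logits)
for token, chosen in trace:
    print(f"{token!r:>10} -> experts {chosen}")
print("expert usage:", dict(usage))
```

A trace like this records which experts ran, not how much each one shaped the final answer. Attributing influence is a harder interpretability problem.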

There are also concerns around fairness, bias concentration, and security. For instance, could a bad actor game the gating mechanism to trigger a specific expert with a known weakness? These questions are drawing MoEs into not just research papers, but also ethics discussions and regulatory interest.

💡 TL;DR – Why MoEs Are Trending Again

MoEs are having a moment—and not just because of novelty. Here’s the summary:

- 🧠 They scale better: Huge model size with lower compute per inference.

- 🔌 They’re more efficient: Use only what’s needed per task.

- 🛠️ Tooling has matured: Easier than ever to build and deploy.

- 🧩 They match modern AI needs: Modular, adaptable, and multi-task-friendly.

- 🤔 They spark philosophical questions: Which parts of the model matter most?

This resurgence isn’t just hype—it’s a genuine evolution in how we think about AI systems. MoEs offer a smart path forward in a world where compute is costly, intelligence is complex, and adaptability is everything.

Use Cases: Where MoE Models Shine

- Large Language Models: Widely rumored (though never officially confirmed) to underpin GPT-4, and openly used in models like Mixtral.

- Multilingual NLP: MoE is great for handling diverse languages by routing to specialized experts.

- Vision + Language Tasks: Emerging multi-modal systems are testing MoE to fuse visual and textual inputs with expert modules (Goyal et al., 2022).

🧠 Is MoE the Future or Just a Fancy Patch?

With all the buzz around MoE, it’s tempting to crown it the future king of AI architectures. But before we hand over the crown, let’s ask the tough question: Is MoE a fundamental shift in AI design, or just a clever band-aid on the problem of scaling deep learning?

The answer? It’s complicated—and fascinating.

🌉 A Bridge to Truly Scalable AI?

MoEs directly address a pressing issue: the growing cost of running large AI models. Dense architectures, where all parameters are used for every input, are hitting practical limits. MoEs offer a “pay-as-you-go” model of computation—scaling model capacity without scaling cost linearly.

As Noam Shazeer, co-author of the seminal Switch Transformer paper and co-founder of Character.AI, put it:

“Mixture of Experts is the only way to scale models indefinitely without making inference prohibitively expensive.”

This efficiency has huge implications for enterprise AI and public deployments alike. And as hardware gets better, MoEs are poised to take fuller advantage of conditional compute, making them even more attractive for large-scale applications.

🔁 A Pattern That Mirrors Nature

MoEs might not just be a performance hack—they may signal a deeper design pattern in intelligence. In nature, specialization leads to resilience. From ant colonies to the human brain, complex systems rely on distinct units performing focused tasks.

In this way, MoEs move us closer to modular AI, a long-standing goal in cognitive architectures. Meta’s Chief AI Scientist, Yann LeCun, has hinted at this direction in his own vision for AI:

“Intelligence is modular by necessity. Systems that learn and reason must specialize across tasks and contexts.”

By modeling different “minds” within one network, MoEs create a form of artificial division of labor—one that’s efficient, scalable, and eerily human-like.

🧠 MoEs in AI Agents & Personal Assistants

As we move toward agentic AI systems, capable of managing tasks across domains and contexts, MoEs offer a natural fit. Instead of having one-size-fits-all models, future AI agents may rely on task-specific experts: some for creativity, some for planning, others for emotional tone.

This idea aligns with the direction OpenAI is heading. In late 2023, CEO Sam Altman said:

“We imagine a world where your AI assistant can call on the right tools, models, or experts, just like you’d assemble a team for a project.”

That’s basically a job description for a Mixture of Experts model.

🔄 Or… Just a Transitional Tool?

Of course, not everyone’s sold. Some researchers believe MoEs are a stopgap—an evolutionary blip until the next paradigm takes hold. Newer architectures like state-space models, long convolutional networks, or even brain-inspired computing might make MoEs obsolete in a few years.

Still, MoEs offer valuable lessons, even if their time in the spotlight is limited. As Ilya Sutskever, co-founder of OpenAI, once noted:

“Every architectural breakthrough, even if temporary, leaves behind tools and intuitions that push the field forward.”

If nothing else, MoEs could influence future models to adopt conditional computation, modularity, and adaptive routing as defaults.

🧪 Research Is Just Getting Started

We’re just beginning to tap into the potential of MoEs. Areas like dynamic expert evolution, load balancing, and multi-modal routing are ripe for exploration. Already, teams at Google, DeepMind, and Meta are investing heavily in improving expert utilization, diversity, and training stability.
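Load balancing is usually encouraged with an auxiliary loss. Switch Transformer (Fedus et al., 2022) uses loss = α · N · Σ f_i · P_i. Here N is the number of experts, f_i is the fraction of tokens dispatched to expert i, and P_i is the average router probability for expert i. The loss is smallest when routing is uniform. This is a minimal sketch with top-1 routing. The default α = 0.01 follows the value reported in that paper, but treat it as a tunable hyperparameter. The function names are hypothetical.

```python
import math

def softmax(logits: list[float]) -> list[float]:
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]

def load_balancing_loss(router_logits: list[list[float]], alpha: float = 0.01) -> float:
    """Switch-Transformer-style auxiliary loss for top-1 routing over a batch of tokens."""
    num_tokens = len(router_logits)
    num_experts = len(router_logits[0])
    probs = [softmax(row) for row in router_logits]
    # f_i: fraction of tokens whose top-1 expert is i.
    counts = [0] * num_experts
    for p in probs:
        counts[max(range(num_experts), key=lambda i: p[i])] += 1
    f = [c / num_tokens for c in counts]
    # P_i: mean router probability assigned to expert i.
    P = [sum(p[i] for p in probs) / num_tokens for i in range(num_experts)]
    return alpha * num_experts * sum(fi * Pi for fi, Pi in zip(f, P))

balanced = [[5.0, 0.0], [0.0, 5.0], [5.0, 0.0], [0.0, 5.0]]
collapsed = [[5.0, 0.0]] * 4  # every token prefers expert 0
print(load_balancing_loss(balanced), load_balancing_loss(collapsed))
```

The balanced batch gives exactly α (0.01), the value reached under uniform routing. The collapsed batch, which sends every token to expert 0, gives about 0.02. In real training only the P_i term carries gradients, since the hard token counts behind f_i are not differentiable.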

A notable voice in this space is Google Brain researcher Barret Zoph, who stated:

“MoE unlocks capacity that we didn’t know how to train efficiently until recently. It’s not just about speed—it’s about unlocking new behaviors.”

That framing is powerful. MoEs aren’t just lighter models—they’re smarter systems with more interesting behavior.

🔮 So… Are MoEs the Future?

Here’s a quick perspective table to help you decide:

Final Thought: Not Just a Patch—Possibly a Blueprint

MoEs aren’t just a trick to stretch existing models—they could represent a blueprint for how intelligence organizes itself. Modular, context-aware, efficient. Whether they evolve or fade, the philosophy behind MoEs—use the right part of the brain for the job—is likely to remain a core principle of intelligent system design.

Or as Sam Altman put it:

“The future of AI is about orchestration. Not just how smart a model is, but how well it knows when to ask for help.”

That sounds a lot like a Mixture of Experts.

📚 Reference List (APA Style)

- Fedus, W., Zoph, B., & Shazeer, N. (2022). Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity. Journal of Machine Learning Research. https://arxiv.org/abs/2101.03961

- Shazeer, N., Mirhoseini, A., Maziarz, K., Davis, A., Le, Q., Hinton, G., & Dean, J. (2017). Outrageously Large Neural Networks: The Sparsely-Gated Mixture-of-Experts Layer. arXiv preprint. https://arxiv.org/abs/1701.06538

- Du, N., Huang, Y., Dai, A. M., Tong, S., Lepikhin, D., Xu, Y., … & Cui, C. (2022). GLaM: Efficient Scaling of Language Models with Mixture-of-Experts. In Proceedings of the 39th International Conference on Machine Learning (ICML). https://arxiv.org/abs/2112.06905

- Lepikhin, D., Lee, H., Xu, Y., Chen, D., Firat, O., Huang, Y., … & Chen, Z. (2020). GShard: Scaling Giant Models with Conditional Computation and Automatic Sharding. arXiv preprint. https://arxiv.org/abs/2006.16668

- Roller, S., Sukhbaatar, S., Szlam, A., & Weston, J. (2021). Hash Layers For Large Sparse Models. In Advances in Neural Information Processing Systems (NeurIPS).

🔗 Additional Resources

These are useful for hands-on exploration, deeper dives, and keeping up with the evolving MoE ecosystem:

- 🔧 DeepSpeed-MoE (Microsoft)
  https://www.deepspeed.ai/tutorials/mixture-of-experts/

- 🧪 Fairseq-MoE (Meta AI)
  https://github.com/pytorch/fairseq/tree/main/examples/moe_lm

- 💻 Google GLaM (Generalist Language Model)
  https://ai.googleblog.com/2021/12/pathways-language-model-glam-scaling-to.html

- 📈 OpenAI Dev Day 2023 – Agentic AI and Modular Systems
  https://openai.com/blog/devday-2023-keynote

- 🗂️ Papers With Code: Mixture of Experts
  https://paperswithcode.com/task/mixture-of-experts

- 🔍 EleutherAI’s Research Chat on MoEs
  https://www.eleuther.ai/blog/moe-research-chat/

📖 Additional Readings

Want to dive deeper into the conceptual and philosophical aspects of MoEs and modular AI systems? Here’s a list of suggested readings:

- “The Bitter Lesson” by Rich Sutton (2019)
  https://www.incompleteideas.net/IncIdeas/BitterLesson.html
  A classic piece explaining why scale (and, by extension, efficient scaling) wins in AI.

- “The Path Towards Autonomous AI Agents” – Andrej Karpathy (2023 keynote)
  Outlines how modular and multi-agent AI systems will become the norm.

- “Beyond Scaling Laws: Mixture of Experts and Conditional Computation” – Gradient Science Blog
  https://thegradient.pub/conditional-computation/